Snapshot

- Details of self-driving AI software explained

- Humanoid robot codenamed ‘Optimus’ in development

- Third Tesla event of its type

Tesla has shown off its latest self-driving developments at a big showcase event – including plans for a humanoid robot.

Alongside a look at the highly technical challenges of creating a self-driving car, Tesla CEO Elon Musk revealed plans for a ‘Tesla Bot’ at the firm’s AI Day, hosted in the US on Thursday night (Friday morning Australian time).

The live-streamed, two-hour showcase opened with a video demonstrating a Tesla operating in ‘Full Self-Driving’ mode – moving autonomously through suburban traffic. The car’s projected movements were telegraphed through its instrument cluster, which also showed key road items including signage and individual lanes.

While Tesla’s Autopilot system (which now faces a probe over the number of accidents it is said to have caused) currently performs some driving tasks automatically, it’s not yet fully autonomous.

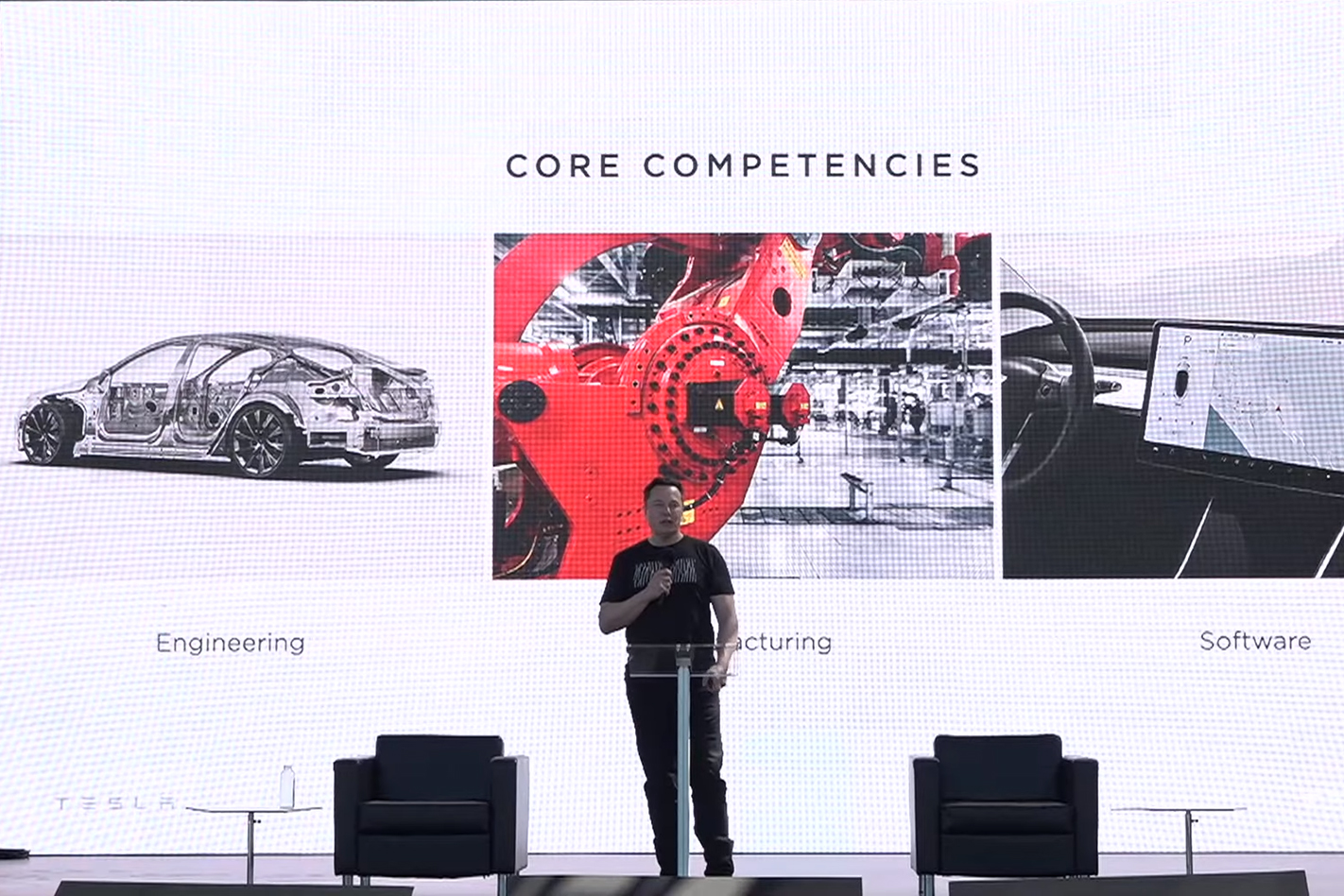

Musk was joined on-stage by a number of Tesla programmers and developers, who delved into the complex process of designing a self-driving ‘neural network’.

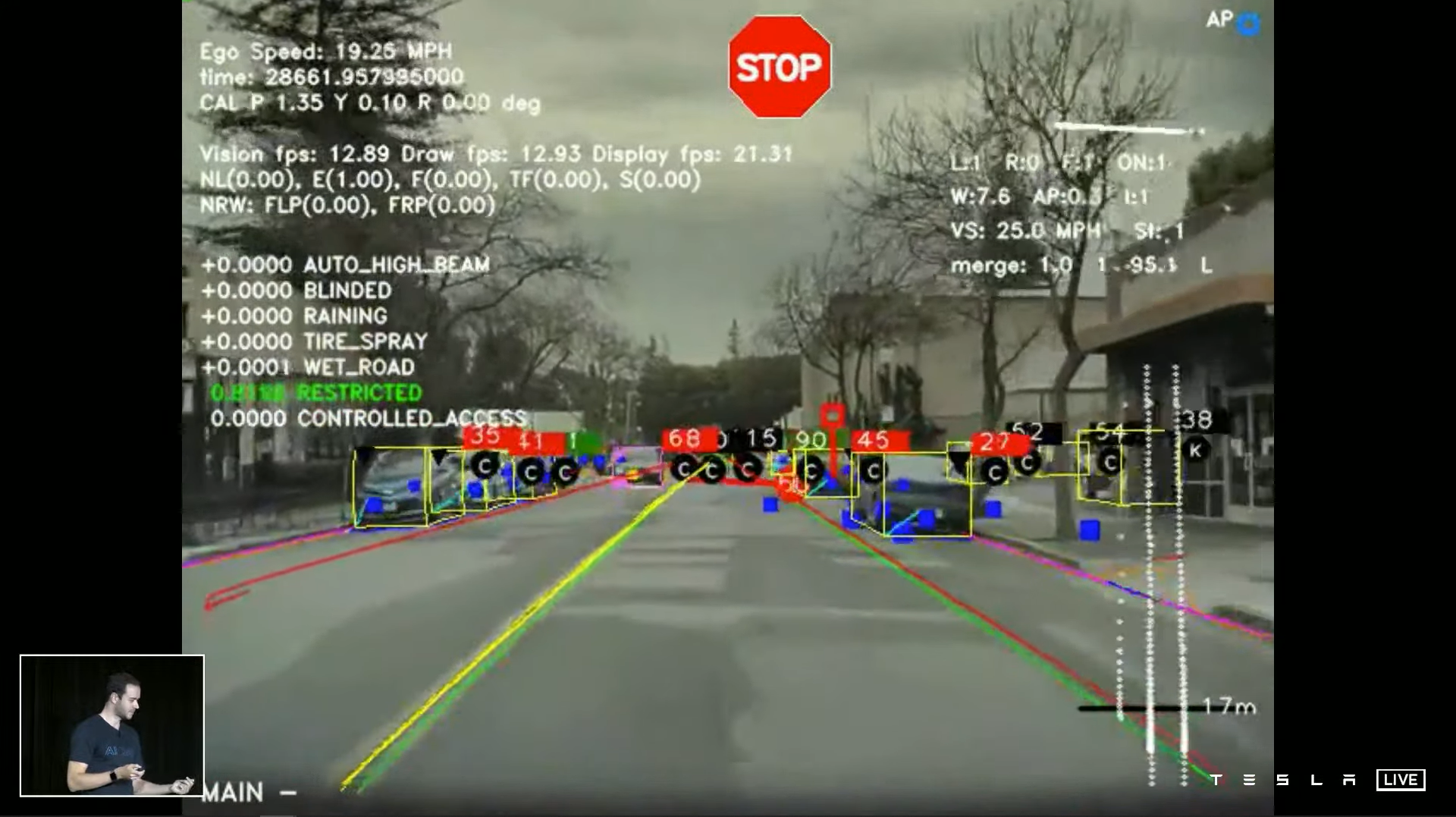

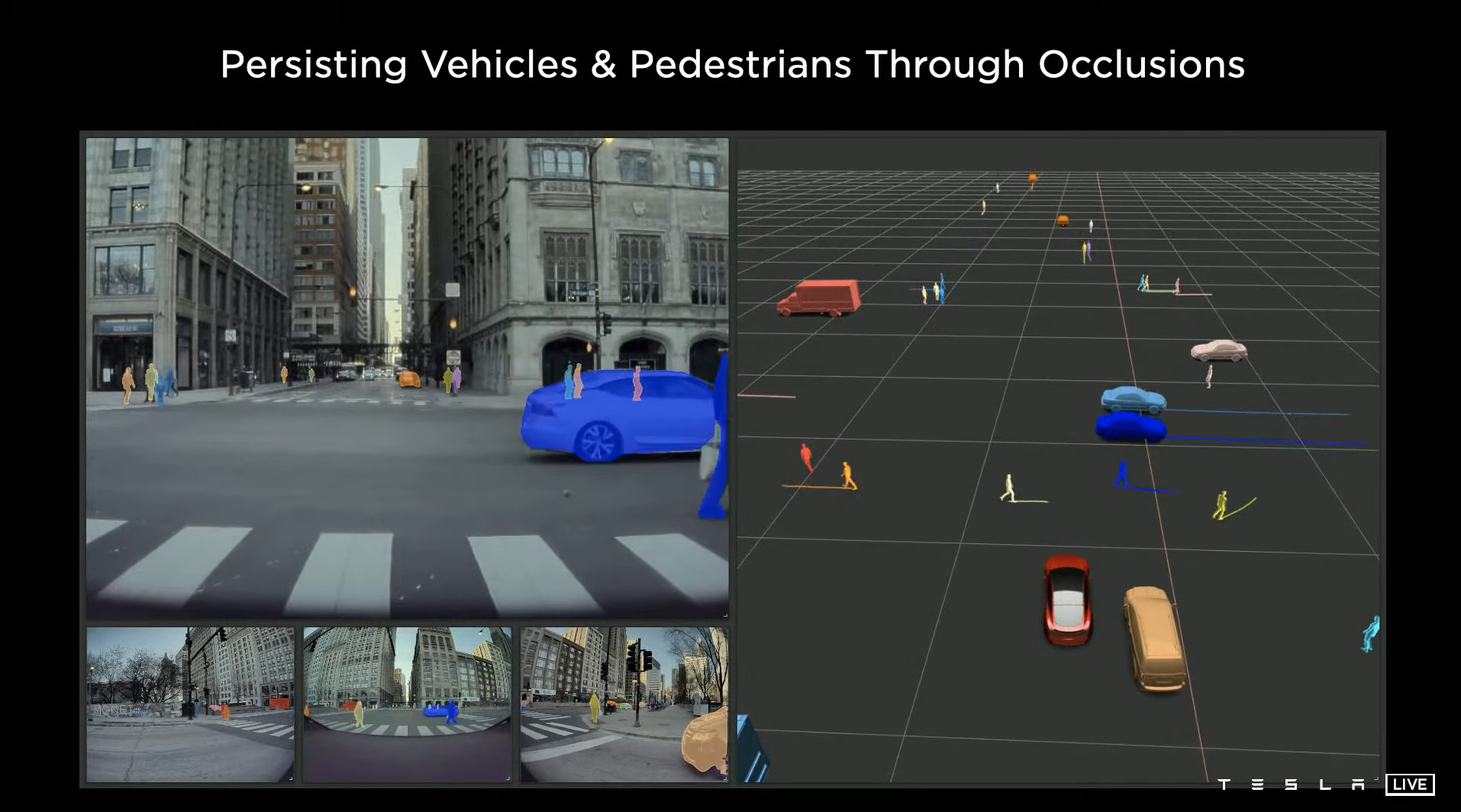

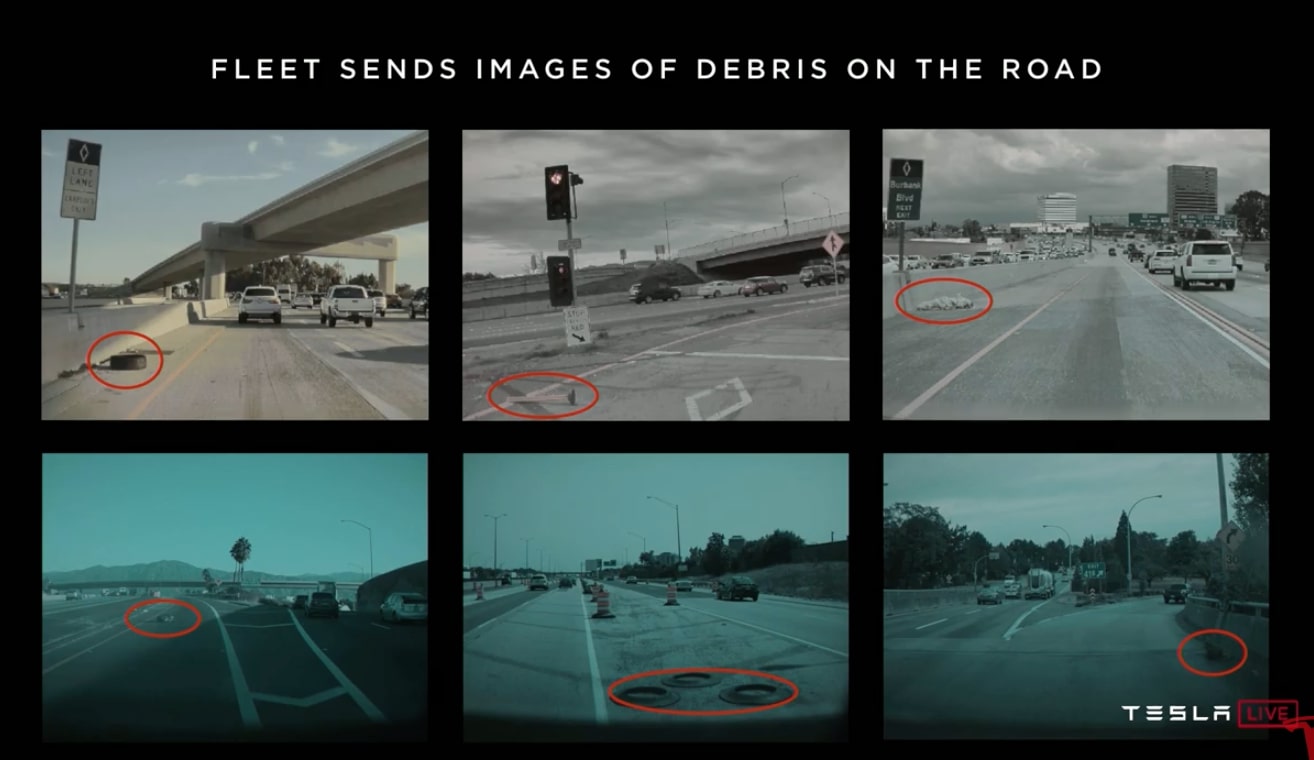

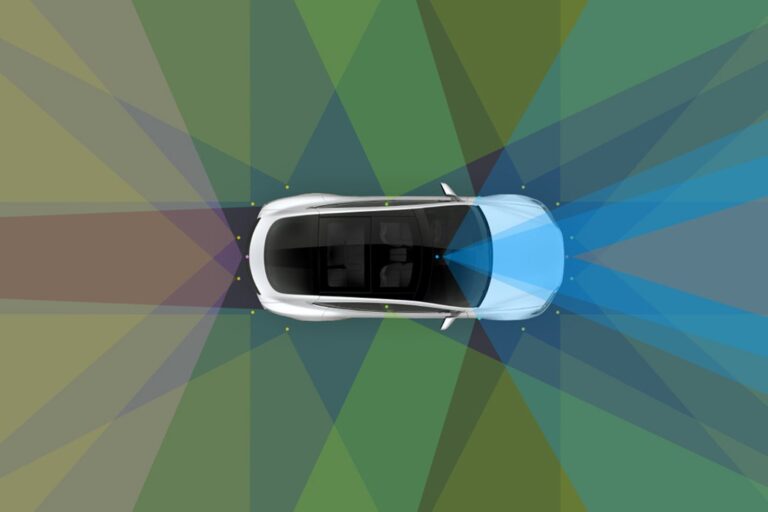

Essentially, Tesla’s system uses the combined input of eight in-car cameras to build a “four-dimensional vector space”.

This environment is populated with individually-identified objects: stop signs, other cars, pedestrians, and anything else which may affect the car’s movement.

Rather than assess every camera’s input on its own, the AI rolls each frame into a singular, spanning view.
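The idea of rolling several camera views into one shared picture can be illustrated with a minimal Python sketch. It is not Tesla's method, which was described as a neural network: here, hypothetical `Camera` and `Detection` types, invented mounting positions and a simple `merge_radius` threshold stand in for it, purely to show detections from separate cameras being placed in a single car-centred frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    x: float  # metres forward of the reference point
    y: float  # metres to the left of the reference point


@dataclass
class Camera:
    name: str
    yaw_deg: float   # camera heading relative to the car's forward axis
    offset_x: float  # mounting position in the car's frame (assumed values)
    offset_y: float


def to_vehicle_frame(cam: Camera, det: Detection) -> Detection:
    """Rotate and shift a camera-frame detection into the car's frame."""
    yaw = math.radians(cam.yaw_deg)
    vx = cam.offset_x + det.x * math.cos(yaw) - det.y * math.sin(yaw)
    vy = cam.offset_y + det.x * math.sin(yaw) + det.y * math.cos(yaw)
    return Detection(det.label, vx, vy)


def fuse(per_camera, merge_radius: float = 1.0) -> list:
    """Combine every camera's detections into one list in the car's frame.

    Two sightings with the same label that land within merge_radius metres
    of each other are treated as one object; the first sighting is kept.
    """
    fused = []
    for cam, detections in per_camera:
        for det in detections:
            candidate = to_vehicle_frame(cam, det)
            duplicate = any(
                kept.label == candidate.label
                and math.hypot(kept.x - candidate.x, kept.y - candidate.y) <= merge_radius
                for kept in fused
            )
            if not duplicate:
                fused.append(candidate)
    return fused


# Hypothetical example: two forward cameras both see the same stop sign.
narrow = Camera("front_narrow", yaw_deg=0.0, offset_x=2.0, offset_y=0.0)
wide = Camera("front_wide", yaw_deg=0.0, offset_x=2.0, offset_y=0.1)
scene = fuse([
    (narrow, [Detection("stop_sign", 10.0, 3.0)]),
    (wide, [Detection("stop_sign", 10.2, 2.8), Detection("pedestrian", 5.0, -2.0)]),
])
```

In this toy scene the stop sign appears in both cameras but ends up as a single entry in the combined view, alongside the pedestrian seen by only one camera.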

Much of the AI’s ‘training’ is taking place with the aid of video-based simulations, allowing the program to refine its responses within varying road layouts and conditions.

For example, hundreds of training videos have featured snow spray which would impair the cameras’ ability to capture the surroundings. The training is being performed within a supercomputer system known as Dojo.

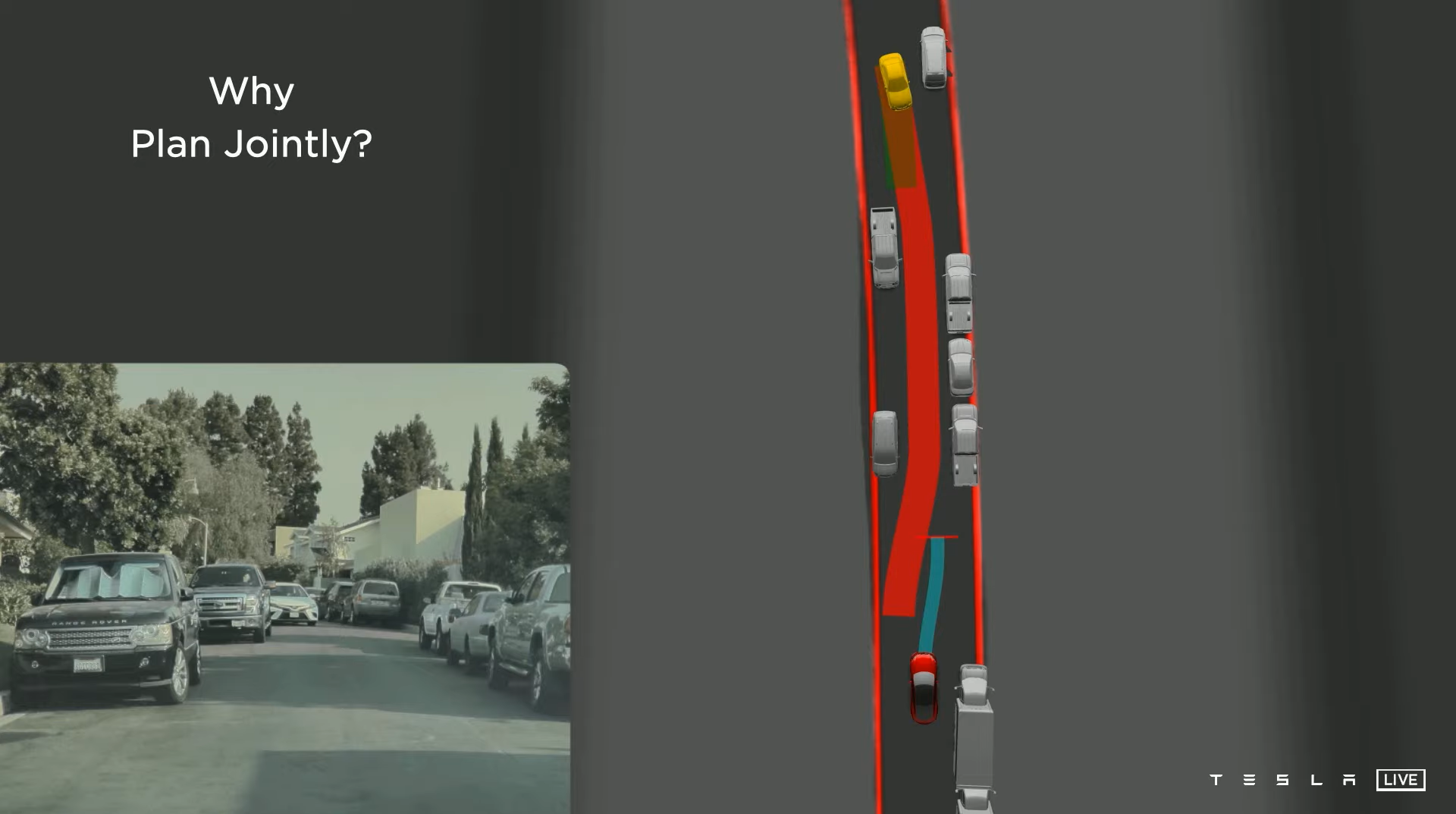

Autopilot software director Ashok Elluswamy also explained some of the AI’s reasoning processes, using the example of giving way on a narrow street.

Here, the car would assess the position and speed of an oncoming vehicle, as well as the location of any others parked on the roadside. It would then decide whether to give way or move forward based on the other driver’s predicted actions, watching for any movement changes.
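As a rough illustration of that reasoning, the sketch below reduces the narrow-street decision to a comparison of timings. It is a simplified stand-in rather than Tesla's planner: the function name `decide`, its parameters and the two-second safety margin are all assumptions made for the example.

```python
def decide(
    ego_dist_to_clear_m: float,
    ego_planned_speed_mps: float,
    oncoming_dist_to_gap_m: float,
    oncoming_speed_mps: float,
    margin_s: float = 2.0,
) -> str:
    """Return "proceed" or "give_way" for a single-lane gap between parked cars.

    ego_dist_to_clear_m: distance our car must travel to be past the gap.
    oncoming_dist_to_gap_m: distance the other car must travel to reach the gap.
    """
    if ego_planned_speed_mps <= 0:
        raise ValueError("planned speed must be positive")

    # A stationary oncoming car is predicted to be waiting for us.
    if oncoming_speed_mps <= 0:
        return "proceed"

    time_to_clear = ego_dist_to_clear_m / ego_planned_speed_mps
    time_until_oncoming = oncoming_dist_to_gap_m / oncoming_speed_mps

    # Go only if we are clear of the gap, with a margin, before the other car arrives.
    if time_to_clear + margin_s < time_until_oncoming:
        return "proceed"
    return "give_way"


# Hypothetical readings, re-evaluated as the other driver's movement changes.
far_away = decide(20.0, 5.0, 100.0, 5.0)
close_by = decide(20.0, 5.0, 25.0, 5.0)
has_stopped = decide(20.0, 5.0, 25.0, 0.0)
```

Calling the function again on every new reading mirrors the "watching for any movement changes" step: if the oncoming driver slows to a stop, the same inputs that previously meant giving way now allow the car to move forward.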

Musk said that “safer than human” driving is entirely achievable with existing camera designs – though new technology will likely be pursued in the company’s future.

Road footage from around 50 countries is apparently being used to train the AI, though American roads have been the primary focus so far.

“Let’s solve it for the US, then we’ll extrapolate it to the rest of the world,” Musk said. “The prime directive is don’t ‘crash’, and that’s true for every country.”

When quizzed about Tesla AI possibly being forced to prioritise the safety of either occupants or pedestrians, Musk dismissed the issue as “somewhat of a false dichotomy” – citing the technology’s rapid processing methods.

“To a computer, everything is moving very slowly. Not that [a conflict] will never happen, but it will be very rare,” he said.

Both Musk and his development team labelled the AI’s predictive ability as “eerily good.” It’s said to already be capable of spotting objects like cyclists and pedestrians at “beyond human” levels.

Musk was also asked about the potential for open-source versions of Tesla’s AI software. “This is fundamentally, extremely expensive,” he answered. “Somehow that has to be paid for, unless people want to work for free.”

“But if other car companies want to license it and use it in their cars, that would be cool.”

While there was plenty of discussion on the finer points of AI learning, it was Musk’s robot reveal that sparked the most interest.

“Our cars are semi-sentient robots on wheels,” Musk said. “Now we want to put that in a humanoid form.”

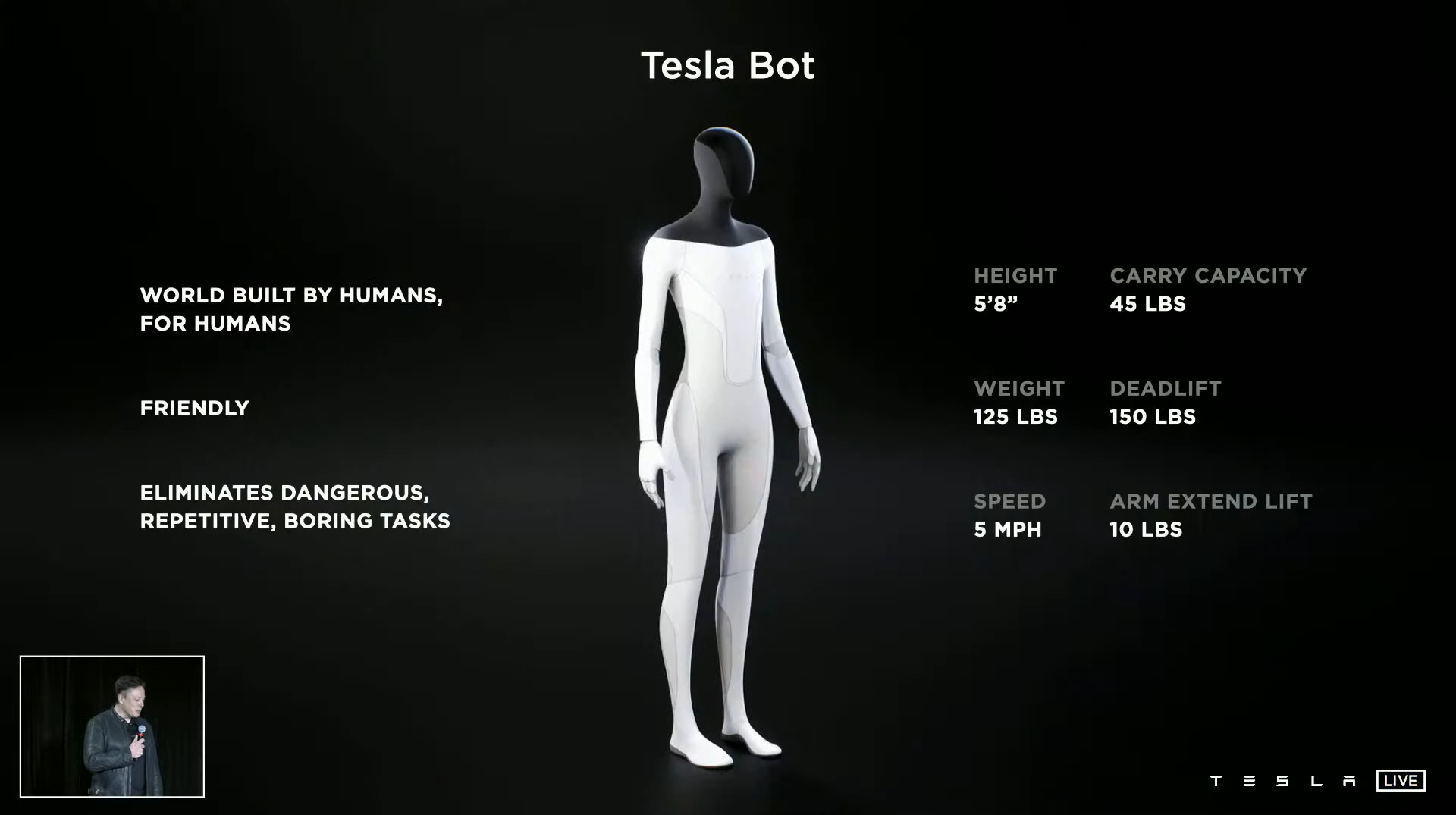

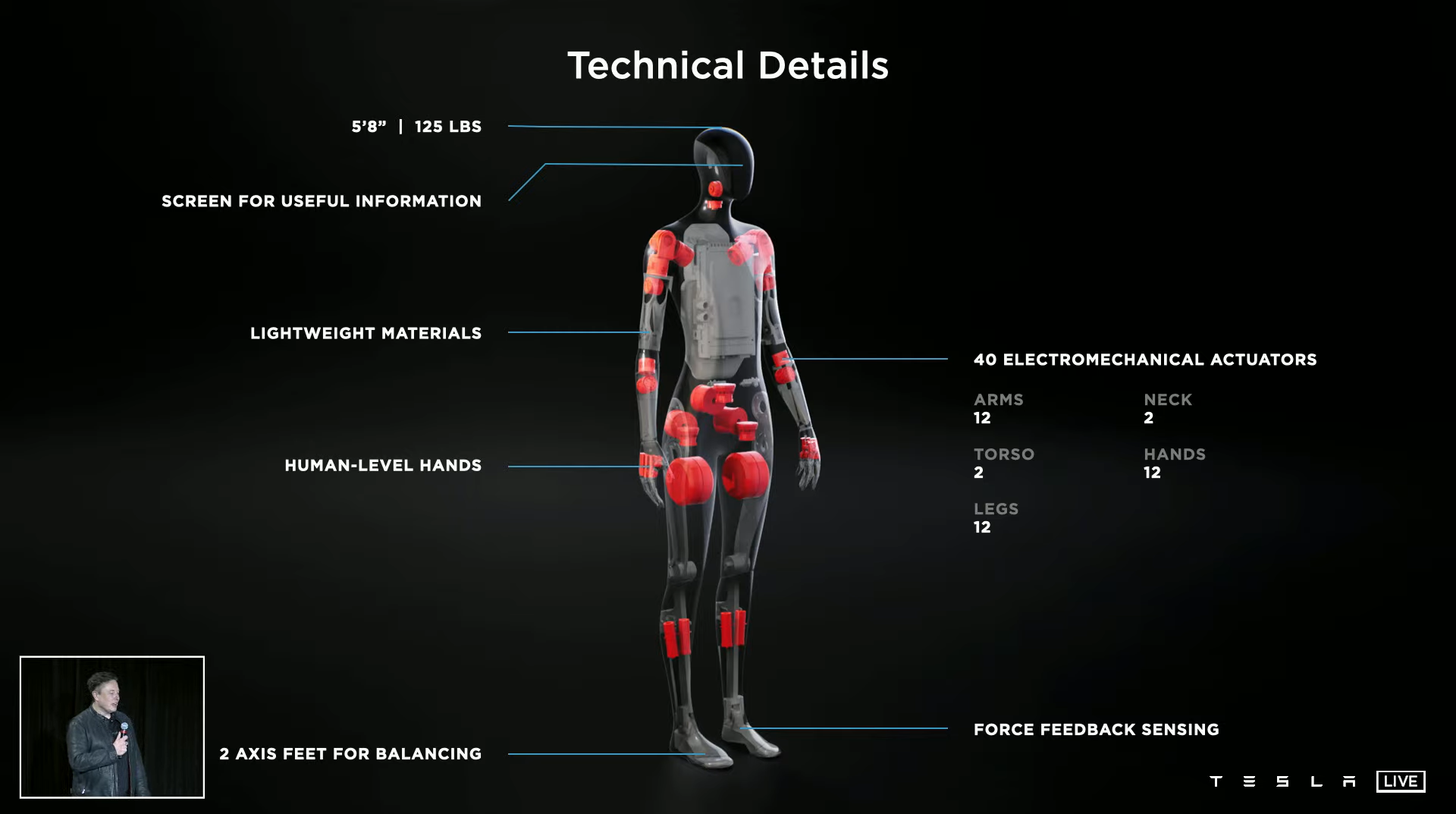

Tesla hopes to have the first prototype of the robot, codenamed ‘Optimus’, in action next year to show “[it is] much more than an electric car company”.

The first versions of the Tesla Bot will be intended for work that is “boring, repetitive, or dangerous” — jobs that “people would least like to do” according to Musk. One such role could be inside Tesla’s own “Alien Dreadnought” car factory.

The robot will feature a human-like design, though Musk seemed keen to distance it from any Terminator comparisons. “We certainly hope this does not feature in a dystopian sci-fi movie,” he joked. “At a physical level, you can run away from it and most likely overpower it.”

Tesla plans to use an eight-camera processing system like that in its cars, backed by similar machine learning software.

Ultimately, Musk says the Tesla Bot should be able to perform tasks based on verbal commands, without “line by line” code training. “I think we can do it,” he added.

AI Day was the third in a series of technology showcases hosted by Tesla.

‘Autonomy Day’ was held in 2019 at the company’s Palo Alto headquarters. At that event, Musk made a number of promises, notably that Tesla would “for sure” have one million self-driving taxis on the road by mid-2020.

When questioned on Twitter in April 2020 about the robo-taxi’s progress, Musk described “regulatory approval [as] the big unknown.” As of today, it has yet to debut in any capacity.

At Autonomy Day, he also unveiled an advanced silicon chip for self-driving functionality, which is being built into every Model S, Model X and Model 3. The chip is made by Samsung and capable of 36 trillion operations per second, and Musk labelled it “the best chip in the world.”

Tesla followed up with ‘Battery Day’ at its Fremont factory last September, where Musk announced plans to reduce battery production costs in readiness for more affordable electric cars. A redesigned ‘tabless’ battery was placed on the agenda, increasing both range and power.
